Meta Unveils AI That Monitors Other AI, Reducing Human Involvement in Development

Meta has recently introduced a groundbreaking AI technology designed to monitor and evaluate other AI models, reducing the need for human involvement. This move is part of Meta’s broader ambition to push the boundaries of artificial intelligence and create more autonomous systems. So, what exactly is this new AI, and how does it work? Let’s dive in!

What is Meta’s Self-Taught Evaluator?

Meta’s new AI model, dubbed the Self-Taught Evaluator, is a system that can evaluate the outputs of other AI models. Those evaluations can in turn be used to improve the models, without relying on human-labeled feedback. This represents a major shift from traditional AI development, where human feedback has been an essential part of the process.

The key benefit? It can dramatically speed up the AI training process, making it less reliant on expensive, time-consuming human annotation.

How Does It Work?

The Self-Taught Evaluator relies on chain-of-thought reasoning, which breaks complex tasks down into smaller logical steps, or reasoning chains. Working through these steps helps the model judge responses more accurately, and its judgments can then be used to fine-tune it over time. Meta’s team trained the evaluator entirely on AI-generated data, removing human input from the training phase, which is a relatively novel approach in the AI field.

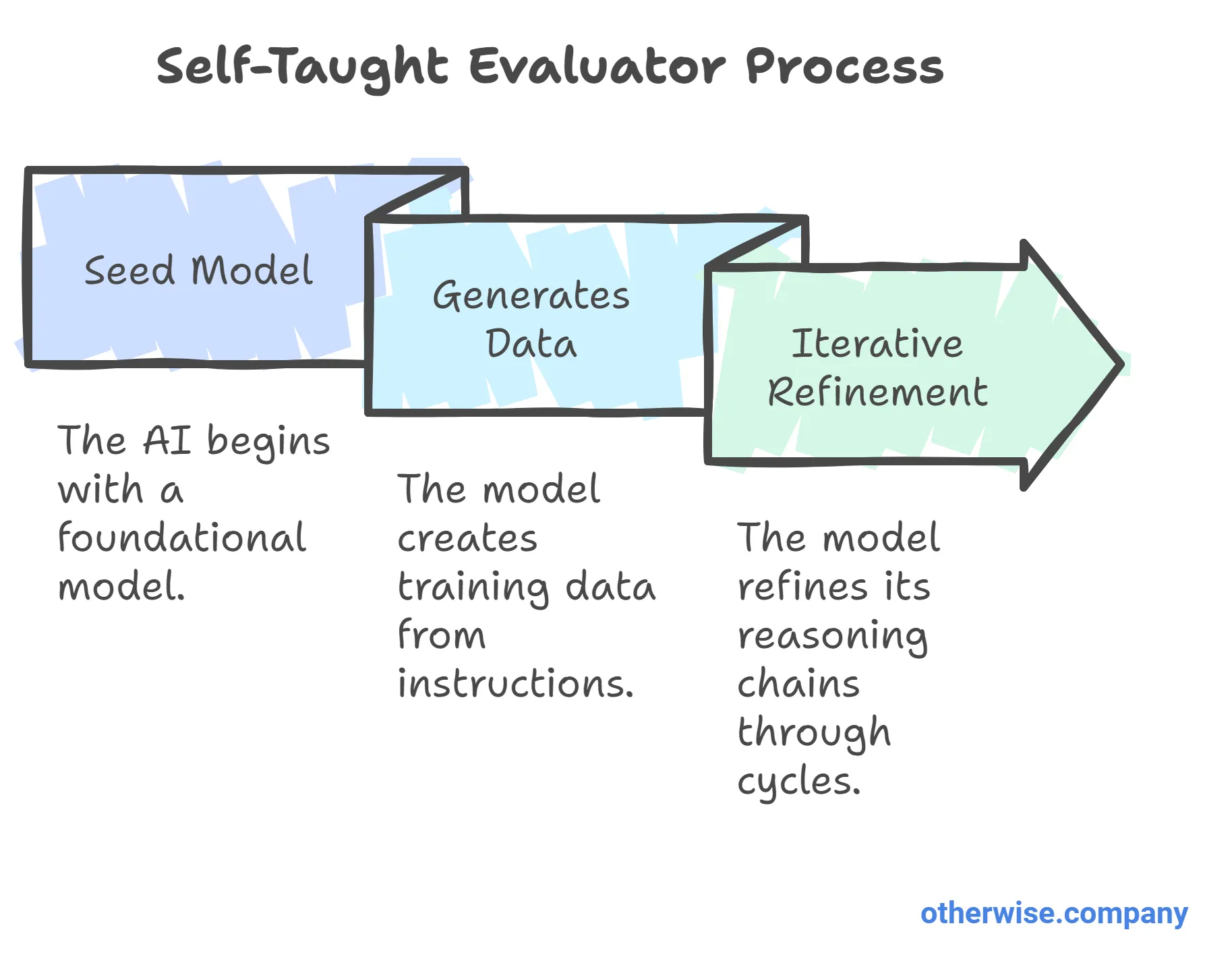

Here’s how the process works:

- Seed Model: The AI begins with a “seed” language model.

- Data Generation: The seed model is used to create synthetic training data from a set of instructions.

- Iterative Refinement: Through repeated cycles, the model’s reasoning chains are checked, flawed ones are discarded, and the model is fine-tuned on the accurate ones that remain.
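The three steps above can be sketched as a toy loop. This is a minimal illustration under heavy assumptions, not Meta’s implementation: no language model is involved, the "tasks" are simple addition problems, and `make_synthetic_pair`, `ToyJudge` and `self_training_loop` are hypothetical names. `ToyJudge`’s only parameter is how often its arithmetic step comes out right, and the fine-tuning step is a stub that nudges that number upward. What the sketch does show is the shape of the loop. It builds response pairs whose better answer is known by construction, so no human labels are needed. The current judge then writes a reasoning chain and a verdict, only verdicts that match the known answer are kept, and the judge is "trained" on what survives.

```python
import random


def make_synthetic_pair(rng: random.Random):
    """Hypothetical data generator: return ((a, b, resp_x, resp_y), label).
    One answer is deliberately corrupted, so the better response is known
    by construction and no human label is used."""
    a, b = rng.randint(0, 99), rng.randint(0, 99)
    good, bad = a + b, a + b + rng.randint(2, 9)
    if rng.random() < 0.5:
        return (a, b, good, bad), "x"
    return (a, b, bad, good), "y"


class ToyJudge:
    """Hypothetical stand-in for an LLM judge. Its only 'parameter' is the
    probability of getting its arithmetic step right."""

    def __init__(self, step_accuracy: float, rng: random.Random):
        self.step_accuracy = step_accuracy
        self.rng = rng

    def judge(self, a, b, resp_x, resp_y):
        """Produce a short reasoning chain and a verdict ('x' or 'y')."""
        ref = a + b
        if self.rng.random() >= self.step_accuracy:
            ref += self.rng.choice([-1, 1])  # a flawed reasoning step
        x_ok, y_ok = resp_x == ref, resp_y == ref
        chain = [
            f"compute {a} + {b} = {ref}",
            f"response x {'matches' if x_ok else 'does not match'}",
            f"response y {'matches' if y_ok else 'does not match'}",
        ]
        if x_ok != y_ok:
            verdict = "x" if x_ok else "y"
        else:
            verdict = self.rng.choice(["x", "y"])  # no basis to decide: guess
        chain.append(f"verdict: {verdict}")
        return chain, verdict


def self_training_loop(seed_accuracy=0.6, iterations=3, pairs_per_iter=200,
                       lr=0.5, seed=0):
    """Toy version of: seed model -> synthetic data -> filter -> retrain."""
    rng = random.Random(seed)
    judge = ToyJudge(seed_accuracy, random.Random(seed + 1))  # the "seed model"
    history = []
    for it in range(iterations):
        kept = []
        for _ in range(pairs_per_iter):
            pair, label = make_synthetic_pair(rng)
            chain, verdict = judge.judge(*pair)
            # Filter: keep only judgments that agree with the known label.
            if verdict == label:
                kept.append((pair, chain, verdict))
        # Stub standing in for fine-tuning on `kept`: move accuracy toward 1.0
        # in proportion to how much usable data survived the filter.
        frac = len(kept) / pairs_per_iter
        new_acc = judge.step_accuracy + lr * frac * (1.0 - judge.step_accuracy)
        judge = ToyJudge(new_acc, random.Random(seed + it + 2))
        history.append((len(kept), new_acc))
    return history


if __name__ == "__main__":
    for i, (kept, acc) in enumerate(self_training_loop(), start=1):
        print(f"iteration {i}: kept {kept} judgments, step accuracy {acc:.3f}")
```

Because the stub always moves accuracy toward 1.0, the printed numbers only illustrate the bookkeeping, not real learning. Note also that the filter keeps a lucky guess even when its reasoning chain was wrong. This is one way flaws in the seed model can leak into the training data.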

Key Advantages for AI Development

By reducing the reliance on human-generated feedback, Meta’s Self-Taught Evaluator offers several key benefits:

- Cost Efficiency: Collecting human feedback, as required by reinforcement learning from human feedback (RLHF), is costly and time-consuming. Automating this step can save significant resources.

- Faster Development Cycles: The model can iterate on its own without waiting for human annotators to provide feedback, which accelerates the development of new AI models.

- Scalability: Enterprises can now build and fine-tune large language models (LLMs) much faster, making it easier to deploy AI solutions in real-world applications.

Meta's Vision: A World With Less Human Oversight

Meta’s AI team believes that the Self-Taught Evaluator could one day pave the way for fully autonomous AI systems. This is especially relevant to applications where AI needs to operate independently, such as digital assistants, automated customer service, or even research and development tasks.

The ability to teach itself and improve from its own mistakes is seen as a critical step toward creating “super-human” AI. Meta researcher Jason Weston explains, “The idea of being self-taught and able to self-evaluate is basically crucial to the idea of getting to this sort of super-human level of AI.”

The Broader Impact on the AI Industry

Meta isn’t the only company exploring this concept. Other tech giants like Google and Anthropic have also been experimenting with similar AI feedback methods. However, what sets Meta apart is its decision to release its models for public use, enabling broader access and innovation.

This move could have a ripple effect across the AI industry, encouraging more companies to adopt AI systems that can self-monitor and improve.

Traditional AI Development and Meta's Self-Taught Evaluator

| Feature | Traditional AI Development | Meta’s Self-Taught Evaluator |
| --- | --- | --- |
| Human Involvement | High | Minimal |
| Feedback Process | Manual, human-annotated | Automated, AI-generated |
| Speed of Development | Slower | Faster |
| Cost | High | Lower due to reduced labor costs |
| Scalability | Limited by human resources | Highly scalable |

Challenges and Considerations

While the Self-Taught Evaluator presents promising advancements, it’s not without its challenges. One key consideration is the reliance on the initial seed model. If the seed model has inherent flaws, it could negatively impact the performance of the entire system. Therefore, selecting a high-quality seed model is crucial for successful implementation.

Moreover, while AI-generated data is faster and cheaper to produce, removing human oversight entirely calls for caution, especially in sensitive areas like healthcare or law.

The Future of AI Development with Meta

As the Self-Taught Evaluator continues to evolve, Meta’s long-term vision is to create AI systems that require little to no human intervention, capable of learning and improving entirely on their own. This has the potential to revolutionize industries across the board, from customer service to scientific research.

Meta’s bold move in making its models publicly available could also lead to new partnerships, innovations, and applications, further cementing its position as a leader in AI technology.

Conclusion

Meta’s launch of the Self-Taught Evaluator is a significant leap forward in AI development, potentially reshaping the way companies build and maintain large language models. By reducing human involvement, accelerating development cycles, and enabling more scalable AI solutions, Meta is leading the charge toward a future where AI can monitor and improve itself, making autonomous AI systems more than just a futuristic dream.

Expect to see more widespread adoption of this technology as the industry continues to explore the incredible possibilities of AI-led development.

Prasanth Parameswaran